Fusion Odyssey

Interactive VJ experience in Virtual Reality

-- Role --

Design Engineer

-- Team --

Individual

-- Timeline --

Nov, 2022 -- Jan, 2023

Personal side project

-- Background --

This was a personal side project driven by my interest in exploring real-time rendering for live performances.

-- Skills --

Unreal Engine, Cinema 4D

-- Problem Definition --

-- HMW --

-- Ideation --

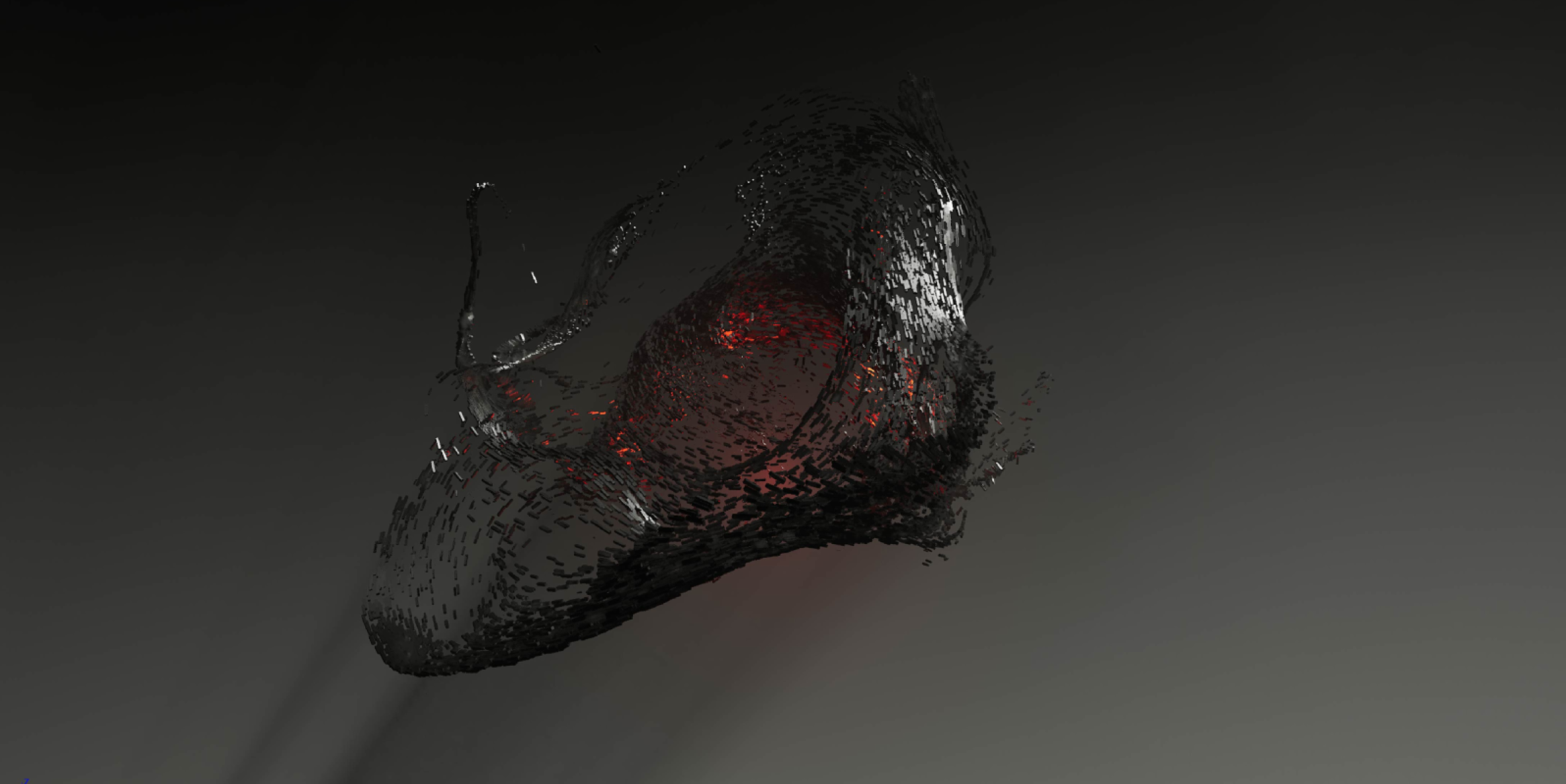

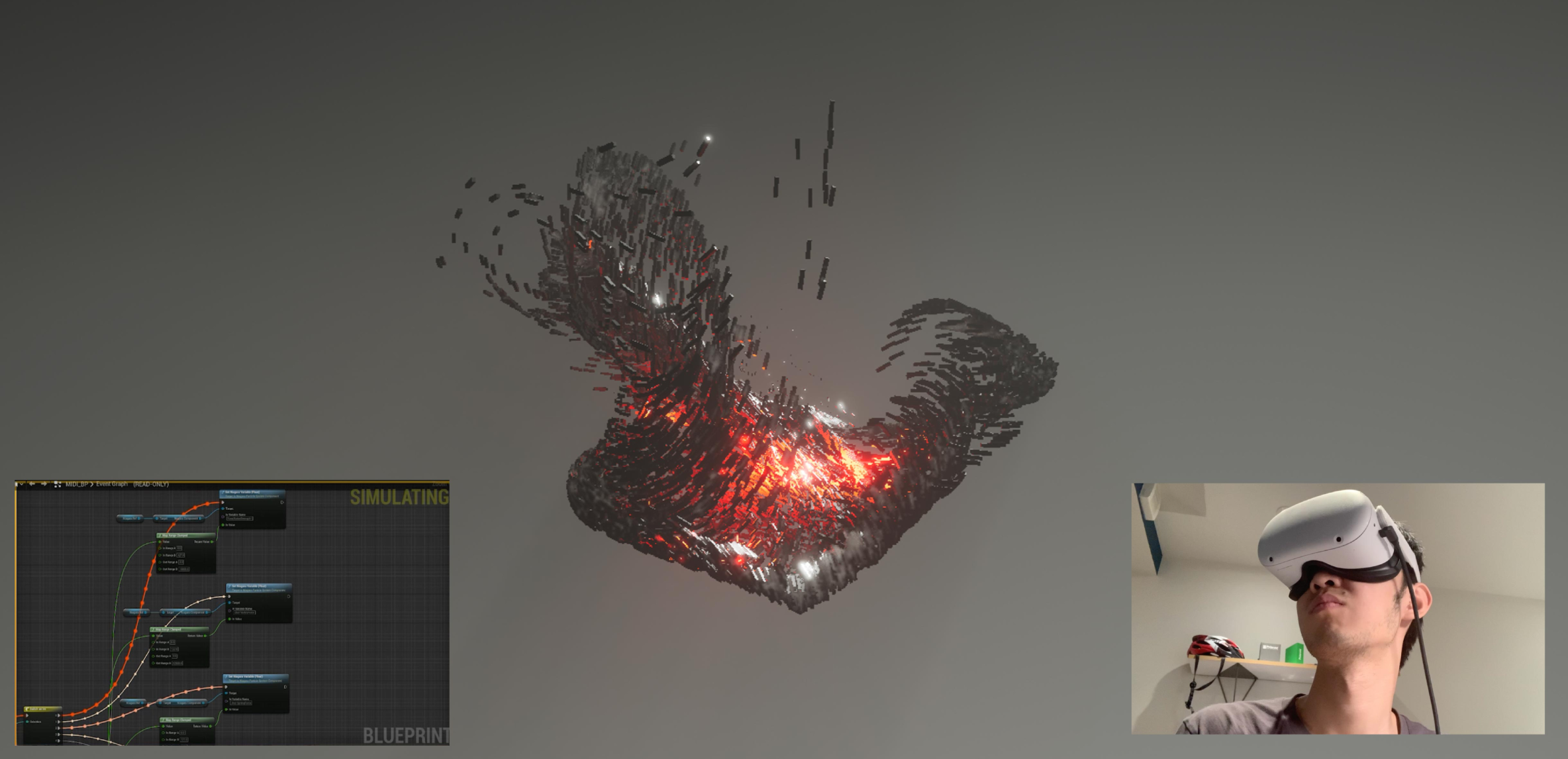

The idea came to me while experimenting with UE’s Niagara particle system and listening to music. I used an audio analyzer to break down the rhythm and linked individual audio features to specific visual effects. I then brought in my MIDI controller and programmed Unreal to map its controls to key system parameters, so the visuals respond to both the music and live input.
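To make the "audio feature triggers a visual effect" step concrete, here is a minimal sketch of one common approach, energy-based beat detection. It is written in plain Python for readability and is not the actual Unreal setup; the function names, the frame size, and the `history` and `sensitivity` values are all illustrative assumptions.

```python
import math


def frame_energies(samples, frame_size):
    """Split samples into non-overlapping frames and return each frame's RMS energy."""
    energies = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        energies.append(math.sqrt(sum(s * s for s in frame) / frame_size))
    return energies


def detect_beats(energies, history=4, sensitivity=1.5):
    """Return indices of frames that are much louder than the frames just before them.

    A frame counts as a beat when its energy exceeds `sensitivity` times the
    mean energy of the preceding `history` frames (both values are assumptions).
    """
    beats = []
    for i in range(history, len(energies)):
        average = sum(energies[i - history:i]) / history
        if energies[i] > 0 and energies[i] > sensitivity * average:
            beats.append(i)
    return beats


if __name__ == "__main__":
    # Synthetic signal: five quiet frames, one loud frame, three quiet frames.
    signal = [0.1] * 20 + [1.0] * 4 + [0.1] * 12
    print(detect_beats(frame_energies(signal, frame_size=4)))
```

Each detected index would then fire an effect, for example a particle burst. Comparing against a short running average rather than a fixed threshold keeps the trigger usable across quiet and loud tracks.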

-- Prototypes --

In UE, I built several particle systems driven by force fields. The force-field parameters were linked to MIDI sliders and to the rhythm of the music, which turned the setup into an interactive VJ system.
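The slider-to-parameter link can be sketched as a simple range mapping. MIDI control change values are 7-bit (0 to 127), so each slider reading has to be rescaled to whatever range the force field expects. The Python below only illustrates that logic and is not the Unreal implementation; `cc_to_param`, `smooth`, and the 0 to 500 strength range are hypothetical.

```python
def cc_to_param(cc_value, low, high):
    """Map a 7-bit MIDI CC value (0-127) linearly onto the range [low, high]."""
    clamped = max(0, min(127, cc_value))  # guard against out-of-range input
    return low + (high - low) * clamped / 127


def smooth(previous, target, factor=0.2):
    """Move a fraction of the way toward the target each frame to avoid visible jumps."""
    return previous + (target - previous) * factor


if __name__ == "__main__":
    # Hypothetical example: a slider at its midpoint driving force-field strength.
    strength = 0.0
    target = cc_to_param(64, low=0.0, high=500.0)
    for _ in range(3):  # three render frames
        strength = smooth(strength, target)
        print(round(strength, 2))
```

The smoothing step is optional, but because a slider only has 128 positions, easing toward the target value tends to hide the stepping in the visuals.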

-- Final --

-- Next Step --

There's a lot more potential in this concept. Using animation sequences, it’s possible to create real-time, interactive dance performances with virtual characters, where movements are triggered by rhythm and beats or by MIDI sliders and buttons. For prototyping, I sculpted a furry character and created a default dance sequence. I used the motion blending feature in UE to combine different skeletal animations and generate new moves. If you have any suggestions or ideas, feel free to reach out!